On Nov. 13, I posted a graph showing the fast growth in bytes requested for RSS and similar feeds from my Wi-Fi Networking News and a few (much smaller) other sites. Bandwidth usage grew from the mid-200 MB per day range to an average of about 350 MB per day. During that same time, I wasn't seeing an increase in visitors of that scale--maybe 10 to 20 percent, not 75 percent.

After analyzing logs, I discovered that a small percentage of aggregation sites and aggregation servers accounted for as much as 20 to 30 percent of the bandwidth, all of it unnecessary: they downloaded aggressively without sending If-Modified-Since headers or using other tools to prevent retrieval of a page that hadn't changed.
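For aggregator developers, here is a minimal sketch of what a polite fetch could look like, using Python's standard `urllib`. The function names and the feed URL are made up for illustration; the idea is simply to send back the `Last-Modified` (and, if the server offers one, `ETag`) value saved from the previous fetch, and to treat a 304 response as "nothing new."

```python
import urllib.error
import urllib.request


def build_conditional_request(url, last_modified=None, etag=None):
    """Build a GET request carrying the validators saved from the last fetch.

    Function and parameter names are hypothetical, chosen for this sketch.
    """
    req = urllib.request.Request(url)
    if last_modified:
        # Value of the Last-Modified header the server sent last time.
        req.add_header("If-Modified-Since", last_modified)
    if etag:
        # Value of the ETag header the server sent last time, if any.
        req.add_header("If-None-Match", etag)
    return req


def fetch_if_changed(url, last_modified=None, etag=None):
    """Return (body, last_modified, etag); body is None if the feed is unchanged."""
    req = build_conditional_request(url, last_modified, etag)
    try:
        with urllib.request.urlopen(req) as resp:
            return (
                resp.read(),
                resp.headers.get("Last-Modified"),
                resp.headers.get("ETag"),
            )
    except urllib.error.HTTPError as err:
        if err.code == 304:
            # Not Modified: keep the cached copy and the old validators.
            return None, last_modified, etag
        raise
```

An aggregator would store the two returned validators next to its cached copy of the feed and pass them in on the next poll, so an unchanged feed costs a few hundred bytes of headers instead of the whole file.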

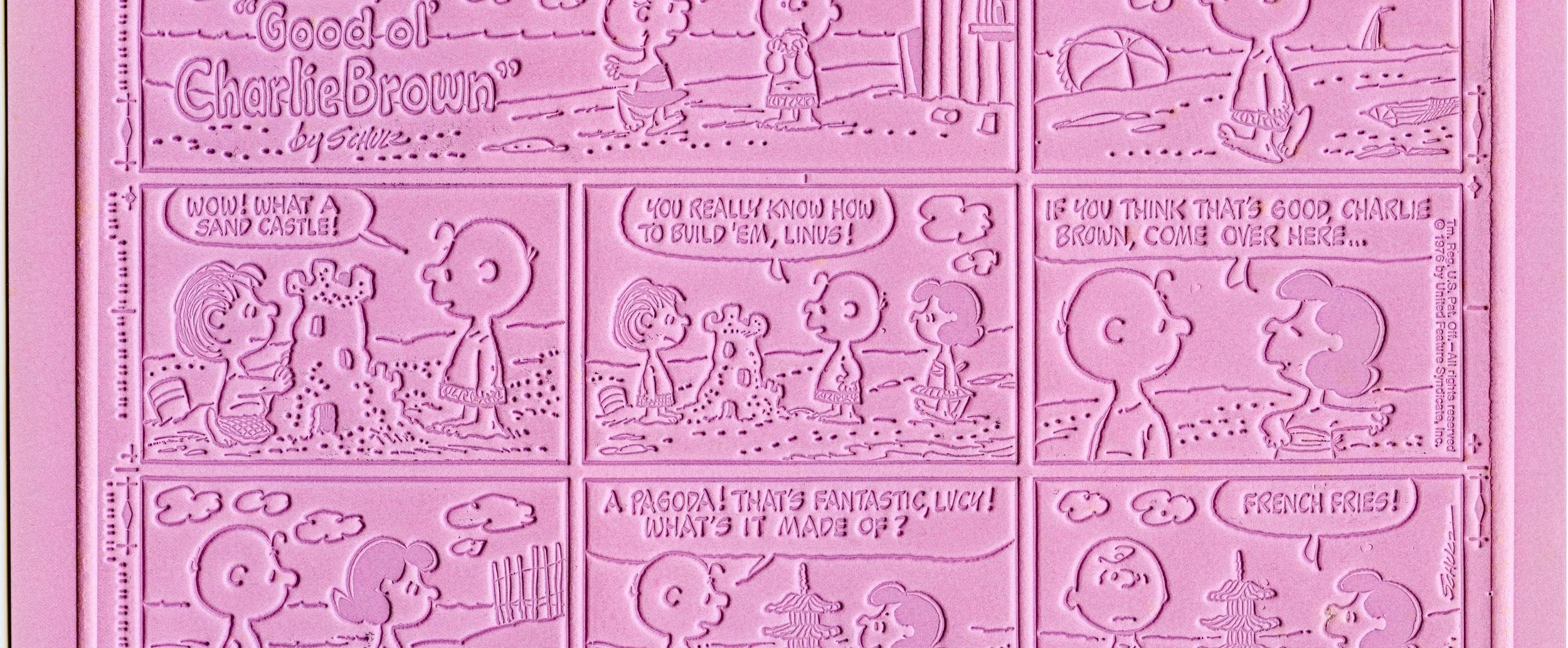

I built a simple program running via Apache that throttles RSS downloads: a given IP and user agent combination can only request a given RSS feed file if it's changed since they last retrieved it. Pretty simple. But the effects are profound, as this graph shows.
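To make the rule concrete, here is a rough Python sketch of the decision it describes. This is not the program I run under Apache; the class name, method name, and in-memory dictionary are all invented for illustration, and answering a throttled request with a 304 is an assumption about one reasonable response.

```python
class FeedThrottle:
    """Track the last served fetch per (IP, user agent, feed) combination.

    Hypothetical sketch: a real deployment would need persistent storage
    and some way to expire old entries.
    """

    def __init__(self):
        # (ip, user_agent, feed_path) -> time the feed was last served
        self._last_served = {}

    def status_for(self, ip, user_agent, feed_path, feed_mtime, now):
        """Return 200 to serve the feed, or 304 if this client already has it.

        feed_mtime and now are timestamps in the same units (e.g. seconds).
        """
        key = (ip, user_agent, feed_path)
        last = self._last_served.get(key)
        if last is not None and feed_mtime <= last:
            # Feed has not changed since this client last retrieved it.
            return 304  # assumed response; the rule only says "can't request"
        self._last_served[key] = now
        return 200


throttle = FeedThrottle()
print(throttle.status_for("192.0.2.1", "Agg/1.0", "/index.rdf", feed_mtime=100, now=150))
print(throttle.status_for("192.0.2.1", "Agg/1.0", "/index.rdf", feed_mtime=100, now=160))
```

The first call serves the feed; the second, from the same IP and user agent with no change to the file in between, is turned away. A different user agent at the same IP gets its own entry, which matters when many readers sit behind one address.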

As you can see, I threw the switch on Nov. 20, just before Thanksgiving, but I haven't seen a real decline in readership at my Wi-Fi site or the other sites--just a decline in bandwidth. The average (with lots of posts over the last week or so, meaning more RSS retrievals because of the updates) is back to about 200 MB per day.

This reveals a lot about the sloppiness of some of the aggregators out there. Right now, my top aggregator is Mozilla (Firefox, primarily), which makes perfect sense: there are a lot of people using the RSS button in Firefox to subscribe to my feed, and if it's the top engine that's because of many unique users.

Since I pay by the gigabyte for overages above my minimum (which I've hit), this change will save me a reasonable pittance: probably $10 or $12 per month. Sounds like someone needs to build a master site for testing aggregation competence, so that aggregator software developers can test their software against it, and users and Web site operators can report problems back to developers.